ON-DEVICE ARTIFICIAL INTELLIGENCE WITH QUALCOMM SNAPDRAGON

28 May 2019 | Planegg

Artificial intelligence is the growth opportunity of recent years.

For most products today, the AI computation still takes place in the cloud. The results are then sent to the devices, where the user makes use of them.

The best-known example is probably Amazon Alexa, whose voice control still requires a permanent internet connection.

The advantage of hosting AI applications in the cloud is access to much greater computing power and much larger amounts of data, which helps to improve the AI algorithms. In addition, some live information, such as weather forecasts, is only available in the cloud.

However, this consumes power and bandwidth and raises security and privacy concerns.

In the professional sector, for example in industrial IoT or autonomous driving, the challenges are even greater, because the requirements for latency, reliability and security are significantly higher.

As processors become more and more powerful, many AI applications can now run directly on the devices. In most cases, a combination of both approaches is used: real-time and critical applications are computed on the device and regularly updated with new information from the cloud.

This information can consist of simple updates, but improved algorithms can also be exchanged between devices. User data, however, is not sent directly to the cloud, which significantly improves data protection, latency and reliability.

Last but not least, bandwidth costs money and resources, and this approach saves both.
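The hybrid approach described above can be sketched roughly as follows. Inference runs on the device. A periodic synchronization only exchanges model versions and improved models with the cloud, so raw sensor data stays local. This is a minimal illustration under simplifying assumptions: the `Cloud` and `EdgeDevice` classes and their methods are hypothetical and do not correspond to any Qualcomm or Atlantik API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# A "model" here is just a function from sensor readings to a decision (hypothetical stand-in)
Model = Callable[[List[float]], str]


@dataclass
class Cloud:
    """Hypothetical cloud service that only distributes improved models."""
    version: int
    model: Model

    def latest_model(self, device_version: int) -> Optional[Tuple[int, Model]]:
        # Hand out a newer model only if the device is out of date
        if self.version > device_version:
            return self.version, self.model
        return None


@dataclass
class EdgeDevice:
    """Hypothetical device that runs inference locally."""
    version: int
    model: Model
    outbox: List[dict] = field(default_factory=list)  # everything the device sends upstream

    def infer(self, sensor_data: List[float]) -> str:
        # Real-time inference runs on the device; sensor data is never added to the outbox
        return self.model(sensor_data)

    def sync(self, cloud: Cloud) -> None:
        # Only the current model version goes upstream; a newer model may come back down
        self.outbox.append({"model_version": self.version})
        update = cloud.latest_model(self.version)
        if update is not None:
            self.version, self.model = update
```

For example, a device with a threshold model of version 1 keeps answering locally. After `sync()` with a cloud that holds version 2, it switches to the new model. Its outbox then contains nothing but `{"model_version": 1}`.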

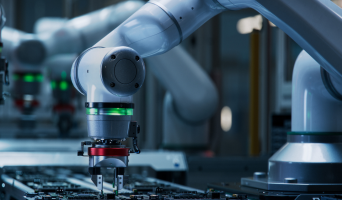

The trend is clear: IoT devices must become increasingly intelligent, because they are expected to respond faster, consume less power and be more secure.

The challenge in moving AI applications from the cloud to devices, which are often mobile, is reconciling the computing demands of AI with the limitations of the devices.

AI requires a great deal of computing power. The more complex the applications become, the larger and more complex the neural network models become.

These models must be processed in real time and be continuously available.

On the other hand, the devices themselves have limitations. Power consumption and battery capacity, for example, are limiting factors, while advanced algorithms demand a lot of computing performance.

These conflicting requirements are best met by exploiting the respective strengths of different computing architectures.

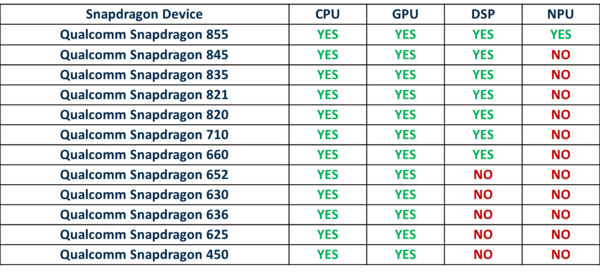

In Qualcomm Snapdragon systems, the required computing power is distributed among the chip's CPU, GPU and DSP. The very latest chips also add an NPU (neural processing unit) designed specifically for AI workloads. This offers enormous advantages in computing speed, real-time capability and power consumption.

Qualcomm provides the Snapdragon Neural Processing Engine (SNPE), a software-accelerated runtime for executing deep neural networks (inference).

The SNPE SDK provides the tools needed to develop neural networks that run on Snapdragon technology.

WITH SNPE, USERS CAN:

- Convert Caffe/Caffe2, ONNX, and TensorFlow models to a SNPE deep learning container (DLC) file

- Quantize DLC files to 16/8-bit fixed point for execution on the Qualcomm® Hexagon™ DSP

- Integrate a network into applications and other code via C++ or Java

- Execute the network on the Snapdragon CPU, the Qualcomm® Adreno™ GPU, or the Hexagon DSP with HVX support

- Execute an arbitrarily deep neural network

- Debug the network model execution on x86 Ubuntu Linux

- Debug and analyze the performance of the network model with SNPE tools

- Benchmark a network model for different targets
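Quantizing a model for the Hexagon DSP means replacing 32-bit floating-point values with small integers plus a scale factor and an offset. The sketch below shows generic asymmetric (min/max) 8-bit quantization of a list of weights. The function names are hypothetical, and the sketch illustrates the principle only. It is not the exact algorithm that the SNPE tools apply.

```python
from typing import List, Tuple


def quantize_uint8(values: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to 0..255 via q = round(v / scale) + zero_point (asymmetric min/max scheme)."""
    # Extend the range to include 0.0 so that zero is represented exactly
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = round(-lo / scale)
    # Round to the nearest step, shift by the offset and clip to the 8-bit range
    q = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_uint8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float values from the 8-bit codes."""
    return [(qi - zero_point) * scale for qi in q]
```

Quantizing `[-1.0, 0.0, 0.5, 1.0]` this way yields a scale of 2/255. Every dequantized value lies within half a quantization step of the original, and 0.0 is represented exactly. The model shrinks to roughly a quarter of its float size in exchange for this bounded rounding error.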

The software is designed to distribute the workload optimally across the Snapdragon CPU, GPU and DSP with the Hexagon Vector eXtensions (HVX).
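The idea of spreading work across CPU, GPU and DSP can be illustrated with a minimal runtime-fallback sketch. It picks the preferred processor that is actually available on the device and falls back towards the CPU otherwise. The `Runtime` enum, the preference order and `select_runtime` are hypothetical and not part of the SNPE API. In practice, the best runtime depends on the model and the chip.

```python
from enum import Enum
from typing import Iterable, Sequence


class Runtime(Enum):
    DSP = "Hexagon DSP"
    GPU = "Adreno GPU"
    CPU = "Snapdragon CPU"


# Assumed preference order: most power-efficient accelerator first, CPU as the last resort
PREFERENCE: Sequence[Runtime] = (Runtime.DSP, Runtime.GPU, Runtime.CPU)


def select_runtime(available: Iterable[Runtime],
                   preference: Sequence[Runtime] = PREFERENCE) -> Runtime:
    """Return the first runtime in the preference order that the device offers."""
    offered = set(available)
    for runtime in preference:
        if runtime in offered:
            return runtime
    raise RuntimeError("no supported runtime available on this device")
```

For example, on a device without a usable DSP, `select_runtime({Runtime.GPU, Runtime.CPU})` returns `Runtime.GPU`.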

Today's common frameworks for creating neural networks, such as TensorFlow and Caffe2, are supported.

In addition, a variety of optimization and debugging tools are available.

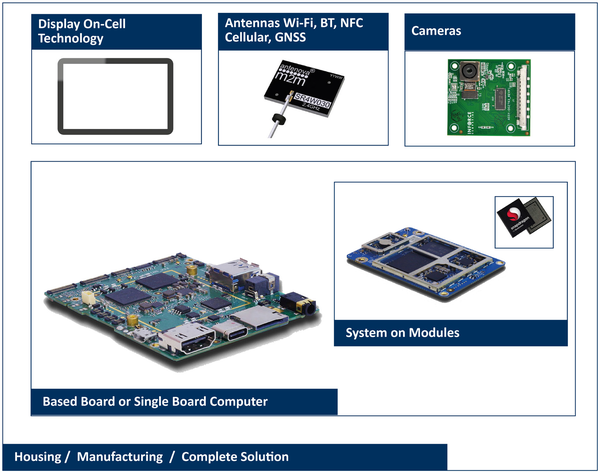

Atlantik provides platforms for various applications. Their application development kits make development much easier and allow AI applications to be built without first having to overcome the hurdles of AI algorithm design. Instead, a large number of examples and sample code are available.

ATLANTIK: ON-DEVICE AI TECHNOLOGY & SERVICE PROVIDER

- AI Technology & Services
  - AI Algorithm Development, Optimization, Training
  - AI Compute Modules: Snapdragon 625/820/835/660/845

- System & Solution
  - Smart Retail, Smart Manufacturing, Smart Car, etc.
  - Development of complete solutions